Emergency Response Measures for Catastrophic AI Risk

I have written a paper on Chinese domestic AI regulation with coauthors James Zhang, Zongze Wu, Michael Chen, Yue Zhu, and Geng Hong. It was presented recently at NeurIPS 2025's Workshop on Regulatable ML, and it may be found on arXiv and SSRN.

Here I'll explain what I take to be the key ideas of the paper in a more casual style. I am speaking only for myself in this post, and not for any of my coauthors.

The top US AI companies have better capabilities than the top Chinese companies for now, but the US lead is a year at most, and I expect it to narrow over the next couple of years.[2] I am therefore nearly as worried about catastrophic risk from Chinese-developed AI as I am about catastrophic risk from American AI.

I would worry somewhat less if Chinese AI companies took the same commendable but insufficient steps to manage risk that their American peers have taken. In particular, I want Chinese companies to do dangerous capability testing before deploying new frontier models and to follow published frontier safety policies (FSPs). The companies are not doing these things in the status quo. DeepSeek did no documented safety testing whatsoever before releasing V3.2 as an open-weight model.[3] Not one of the leading Chinese companies has published a safety policy.[4]

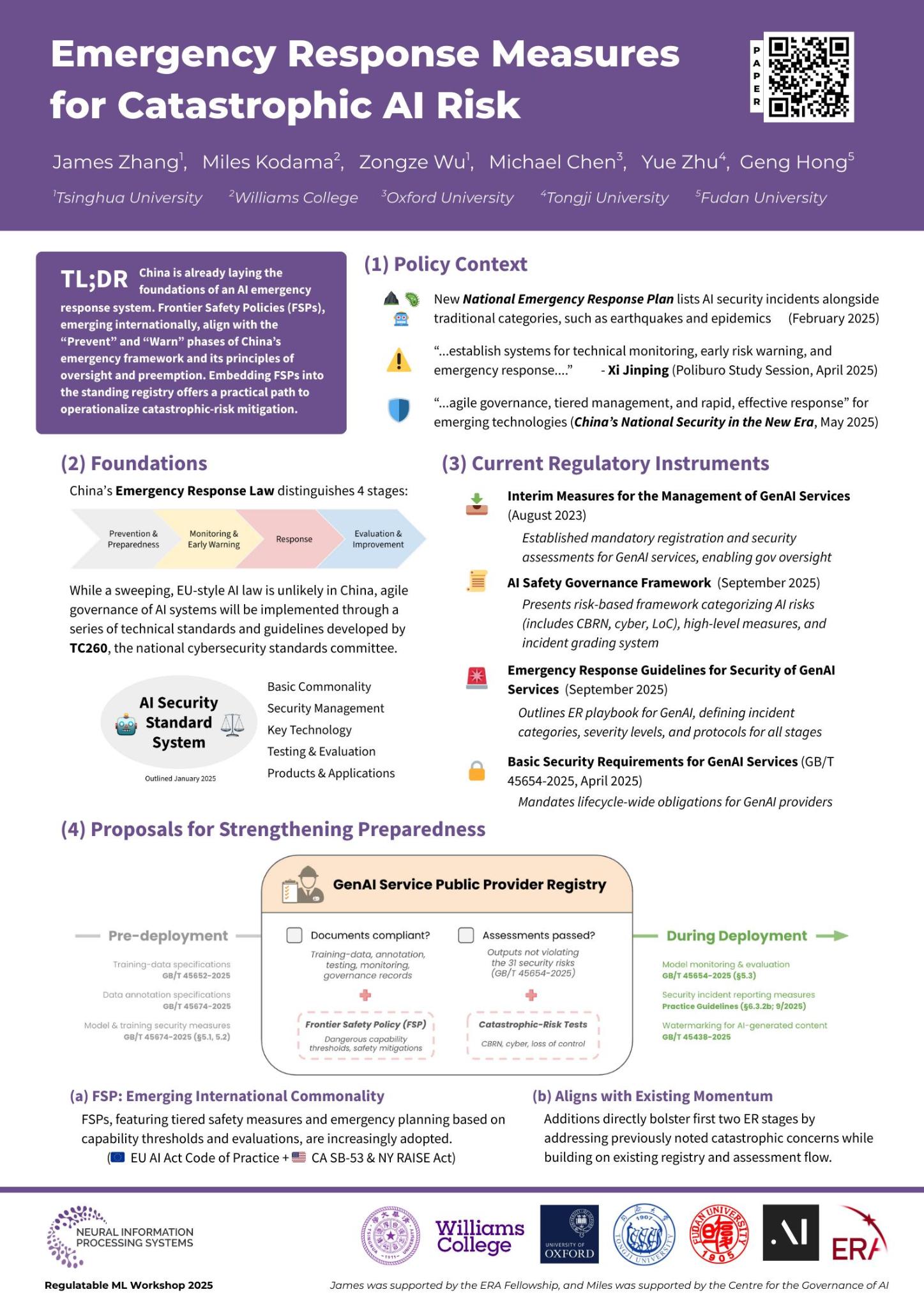

Now here's our intervention. We point out that FSPs are a reasonable way of implementing the CCP's stated policy goals on AI, and that China's government already has tools in place to mandate FSPs if it wishes to do so.

Earlier this year, Xi Jinping announced that China should "establish systems for technical monitoring, early risk warning and emergency response" to guarantee AI's "safety, reliability and controllability." Notice that Xi is talking about identifying risks in advance and taking steps to prevent safety incidents before they can strike. Even "emergency response" means something more than reaction in official Chinese thinking, also encompassing risk mitigation and early detection.[5] China's State Council, TC 260, and prominent Chinese academics have all echoed Xi's call for AI emergency preparedness. So the very highest levels of the Chinese state are calling for proactive AI risk management.

What risks do they have in mind? There are some signs that catastrophic risks are on the CCP's agenda. China's 2025 National Emergency Response Plan listed AI security incidents in the same category as earthquakes and infectious disease epidemics. This suggests Chinese officials think AI could plausibly cause a mass casualty event soon. Moreover, they have in mind some of the same threat models that motivated Western FSPs. TC 260's AI Safety Governance Framework explicitly mentioned WMD engineering uplift and rogue replication as key safety concerns.[6] Compare the two categories of dangerous capabilities covered by Anthropic's RSP: CBRN weapons uplift and autonomous AI R&D, which is concerning in part because it's a prerequisite for rogue replication.

So one of China's stated goals is to proactively manage catastrophic risks from frontier AI. The good news for them is that there's a well-validated strategy for achieving this goal. You require every frontier AI company to publish an FSP, test new models for dangerous capabilities, and take the prescribed precautions if the tests reveal strong dangerous capabilities. California, New York, and the European Union have all agreed this is the way to go. All China has to do is copy their homework.

Do Chinese regulators have the legal authority and operational capacity they'd need to enforce a Chinese version of the EU Code of Practice? Sure they do. These regulators already make Chinese AI companies follow content security rules vastly more onerous and prescriptive than American or European catastrophic risk rules. The Basic Security Requirements for Gen AI Services mandate thorough training data filtering and extensive predeployment testing, all to stop models from saying subversive things like "May 35" or "Winnie the Pooh." If the CCP can make Chinese companies prove their models are robust against a thirty-one-item list of censorship risks, it can absolutely make them write down FSPs and run some bio-uplift evals.

For my part (and let me stress that I'm speaking only for myself), I think making frontier AI companies write and follow Western-style FSPs would clearly be good from the CCP's perspective. The most obvious reason is that a global AI-induced catastrophe would hurt Chinese people and harm the interests of China's rulers, so the CCP should favor a cheap intervention to make such a catastrophe less likely. A less direct benefit is that adopting global best practices at home would make China's ongoing appeal for international cooperation on AI safety more credible. Li Qiang can make all the speeches he wants about China's commitment to safety. I don't expect US leaders to take this rhetoric seriously as long as all of China's frontier AI companies have worse safety and transparency practices than even xAI. But matters would be different if China passed binding domestic regulation at least as strong as SB 53. Such a signal of seriousness might help bring the US back to the negotiating table.

[1] Thanks to James for creating this poster.

[2] Especially if the US decides to sell off much of our compute advantage over China.

[3] At least one anonymous source has claimed that DeepSeek does run dangerous capability evals before releasing a new model, and simply doesn't mention these evals to the outside world. I'd give it less than a chance that DeepSeek really does run SOTA dangerous capability evals internally, and even if they do, I have a problem with their lack of transparency.

[4] Notably, the Shanghai AI Laboratory has published a detailed FSP written in collaboration with Concordia AI. But I do not count SAIL as a frontier AI lab.

[5] The Emergency Response Law of the PRC does not, as one might naïvely expect, only cover what government should do once an emergency has already started. It also says how the Chinese government should prevent and prepare for emergencies, and how it should conduct surveillance to detect an active emergency as early as possible.

[6] For reference, TC 260 is the primary body responsible for setting cybersecurity and data protection standards in China.